The role of causal analysis in the big data era

With the rapid advancement of computational social science methodologies, the integration of digital technologies and large-scale models into both academic research and business applications has become increasingly prevalent. A common perspective suggests that big data-driven analytical approaches primarily rely on correlation, leading to the argument that, in the era of big data, the pursuit of causality should be abandoned in favor of more accessible and computationally efficient correlational analyses. While this viewpoint appears plausible, it is ultimately reductive and flawed. Regardless of the era, both correlation and causation remain fundamental objectives of scientific inquiry. The advent of big data not only introduces new challenges but also offers novel opportunities for enhancing causal analysis.

Importance of causal explanations

From a research objective standpoint, social science inquiry can be broadly categorized into three types: descriptive, explanatory, and predictive research. Descriptive research seeks to answer the question of “what is,” often employing representative survey data or large-scale datasets to characterize phenomena, identify trends, and analyze correlations across spatial and temporal dimensions. Explanatory research addresses the question of “why,” utilizing statistical methods and causal inference techniques to elucidate relationships between variables and uncover the underlying mechanisms driving processes. Predictive research focuses on “what will happen in the future,” drawing on historical data to identify underlying principles and patterns and forecast future developments or event probabilities. Within this framework, contemporary big data research predominantly emphasizes description and prediction. However, this does not imply that causal explanation is irrelevant.

First, causal explanations help distinguish genuine relationships from misleading correlations. While descriptive big data studies can identify numerous correlations and patterns, these may be spurious or lack substantive significance. For instance, an analysis of health-related big data might reveal a positive correlation between ice cream sales and the number of individuals suffering from heat stroke. Without causal reasoning, one might erroneously conclude that consuming ice cream causes heat stroke. In reality, both phenomena are driven by high temperatures. Causal analysis allows researchers to identify the true underlying factor, temperature, and thereby avoid misleading conclusions.
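The ice cream example can be made concrete with a small simulation. The sketch below is illustrative only: the data are synthetic, and the coefficients, noise levels, and helper names (`pearson`, `residuals`) are assumptions rather than anything drawn from a real health dataset. It shows that two variables driven by a common cause are strongly correlated, and that the correlation largely disappears once the common cause is regressed out of both.

```python
import random


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / (vx * vy) ** 0.5


def residuals(y, x):
    """Residuals of y after a simple least-squares regression on x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    slope = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sum(
        (a - mx) ** 2 for a in x
    )
    return [b - my - slope * (a - mx) for a, b in zip(x, y)]


# Synthetic data (assumed): temperature drives both outcomes,
# and neither outcome affects the other.
rng = random.Random(0)
temperature = [rng.uniform(15, 40) for _ in range(5000)]
ice_cream = [2.0 * t + rng.gauss(0, 5) for t in temperature]
heat_stroke = [0.5 * t + rng.gauss(0, 2) for t in temperature]

# Raw association between the two outcomes.
raw_corr = pearson(ice_cream, heat_stroke)

# Association after removing the part of each outcome explained by temperature.
adjusted_corr = pearson(
    residuals(ice_cream, temperature), residuals(heat_stroke, temperature)
)

print(f"raw correlation:      {raw_corr:.3f}")
print(f"adjusted correlation: {adjusted_corr:.3f}")
```

In real data the confounder is rarely known in advance or measured this cleanly, which is why the identification step calls for causal reasoning and not only computation.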

Furthermore, while descriptive studies can map out the surface-level characteristics of a phenomenon, causal explanations provide deeper insights by identifying the underlying mechanisms behind observed patterns. For example, educational big data may show a negative correlation between students’ use of electronic devices and academic performance. A purely descriptive approach might suggest that reducing screen time directly improves academic outcomes. However, causal analysis may reveal confounding variables, such as socioeconomic status and learning habits, which enables researchers to propose more precise and effective recommendations.

Second, predictive models based solely on correlation are vulnerable to failure when underlying conditions change, whereas causal explanations make models more broadly applicable by identifying enduring causal relationships. A notable example is Google Flu Trends, once a celebrated achievement in big data analytics. Using Google search data and machine learning algorithms, the model accurately predicted influenza trends in the US around 2009, with results comparable in accuracy to those of the Centers for Disease Control and Prevention (CDC). However, after 2011, the model systematically overestimated flu incidence, at times producing estimates twice as high as CDC-reported figures. The model’s failure drew scholarly scrutiny, but its “black box” nature left Google’s engineers unable to explain, anticipate, or fix the problem.

In many applied fields such as law, finance, and medicine, predictive models based on big data require not only high accuracy but also interpretability. Causal explanations help elucidate a model’s decision-making process, thereby increasing user trust. For example, in the medical field, studies have shown that many physicians hesitate to rely on AI-driven diagnostic predictions derived from medical big data. A key reason for this reluctance is the lack of transparency in the models’ decision-making mechanisms. If predictive models could clearly explain the reasoning behind their outputs, medical professionals would be far more likely to adopt them in clinical settings.

The lack of interpretability and transparency remains a critical challenge in the application and adoption of large-scale predictive models. Although prediction primarily relies on correlation, causal explanations are essential for ensuring that predictions are scientific, logical, and acceptable. Social science research utilizing big data should not disregard causality while emphasizing correlation. In fact, big data applications demand a stronger focus on causal reasoning. However, current mainstream big data methodologies are not yet fully equipped to address these requirements.

Enhancing causal inference through big data research

Big data research not only necessitates causal analysis but also enhances the credibility and reliability of causal inference by providing extensive data sources, enabling precise variable control, expanding methodological tools for causal inference, and supporting dynamic causal analysis.

First, big data encompasses a wide range of data types, often with high temporal resolution and broad spatial coverage. These diverse data sources enable researchers to capture complex causal relationships with greater granularity. For instance, e-commerce platforms such as Taobao and JD.com optimize their recommendation algorithms by incorporating causal inference models. These models draw on a wide range of user data, including purchase histories, browsing behavior, search queries, and click-level interaction logs.

Second, compared to traditional datasets, big data typically incorporates a wider range of variables and finer-grained information, which allows for more effective control of confounding variables and facilitates more precise causal identification. In the field of education, for example, researchers analyze students’ learning behavior data to assess the impact of different teaching strategies on academic performance. By controlling for background characteristics such as socioeconomic status and learning habits, researchers can more accurately infer the causal effects of teaching strategies.

Third, big data research has introduced a broad array of tools and methodologies for causal inference, including techniques such as causal forests and double machine learning, which are particularly effective for handling high-dimensional data and nonlinear relationships. For example, in e-commerce research, analysts use platform transaction data to examine the impact of promotional campaigns on sales performance. By employing double machine learning techniques, researchers can estimate the causal effects of promotions while accounting for confounding variables such as seasonal fluctuations and market competition.
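The core idea of double machine learning can be sketched in a few lines. The example below is a simplified illustration under stated assumptions, not a description of how any platform actually analyzes its data: the data are synthetic, there is a single confounder (labeled `season`), the true promotion effect is fixed at 2.0, and ordinary least squares stands in for the flexible machine learning models that would normally predict the outcome and the treatment from the confounders. All names (`fit_line`, `dml_effect`) are hypothetical. The steps are: predict sales and promotion intensity from the confounder on one fold, compute residuals on the held-out fold (cross-fitting), and regress the sales residuals on the promotion residuals.

```python
import random


def fit_line(x, y):
    """Least-squares intercept and slope for y regressed on x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    slope = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sum(
        (a - mx) ** 2 for a in x
    )
    return my - slope * mx, slope


def dml_effect(y, d, s, n_folds=2):
    """Partialling-out estimate of the effect of d on y, with cross-fitting.

    A linear fit is used as the nuisance learner for simplicity; in practice
    a flexible ML model would take its place.
    """
    n = len(y)
    res_y, res_d = [0.0] * n, [0.0] * n
    for k in range(n_folds):
        train = [i for i in range(n) if i % n_folds != k]
        test = [i for i in range(n) if i % n_folds == k]
        s_train = [s[i] for i in train]
        # Nuisance models are fitted on the training fold only.
        ay, by = fit_line(s_train, [y[i] for i in train])
        ad, bd = fit_line(s_train, [d[i] for i in train])
        # Residuals are computed on the held-out fold.
        for i in test:
            res_y[i] = y[i] - (ay + by * s[i])
            res_d[i] = d[i] - (ad + bd * s[i])
    # Final stage: regress outcome residuals on treatment residuals.
    return sum(a * b for a, b in zip(res_d, res_y)) / sum(a * a for a in res_d)


# Synthetic data (assumed): season raises both promotion intensity and sales.
rng = random.Random(42)
true_effect = 2.0
season = [rng.gauss(0, 1) for _ in range(4000)]
promotion = [0.8 * s + rng.gauss(0, 1) for s in season]
sales = [
    true_effect * d + 1.5 * s + rng.gauss(0, 1) for d, s in zip(promotion, season)
]

naive_effect = fit_line(promotion, sales)[1]  # ignores the confounder
dml_estimate = dml_effect(sales, promotion, season)

print(f"naive estimate: {naive_effect:.2f}")
print(f"DML estimate:   {dml_estimate:.2f}")
```

The naive regression attributes part of the seasonal lift to the promotion, while the residual-on-residual estimate should land close to the assumed true effect. With many confounders or nonlinear relationships, the linear fits here would be replaced by more flexible learners, which is where the method earns its name.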

Fourth, big data frequently exhibits time-series characteristics, making it well-suited for capturing the dynamic relationships between variables. This is particularly valuable for examining the timing and lagged effects of causal relationships. In environmental science, for instance, researchers utilize meteorological sensor data and air quality monitoring records to investigate the dynamic causal relationship between atmospheric conditions and pollution levels. By analyzing time-series data on wind speed, humidity, and pollutant concentrations, researchers can identify causal pathways linking meteorological factors to air quality fluctuations.
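A first descriptive step toward studying lagged effects is to compare how strongly one series tracks another at different time offsets. The sketch below uses synthetic data in which wind speed is assumed to lower pollutant concentration two time steps later; the lag, coefficients, and function names are illustrative assumptions. Lagged correlation on its own does not establish causation, but it shows how high-frequency data make the timing of a relationship visible.

```python
import random


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / (vx * vy) ** 0.5


def lagged_correlation(cause, effect, lag):
    """Correlation between cause[t - lag] and effect[t]."""
    if lag == 0:
        return pearson(cause, effect)
    return pearson(cause[:-lag], effect[lag:])


# Synthetic data (assumed): wind at time t - 2 disperses pollution at time t.
rng = random.Random(1)
n = 2000
true_lag = 2
wind = [rng.gauss(0, 1) for _ in range(n)]
pollution = [
    (-0.8 * wind[t - true_lag] if t >= true_lag else 0.0) + rng.gauss(0, 0.5)
    for t in range(n)
]

# Scan a range of candidate lags and pick the strongest association.
correlations = {lag: lagged_correlation(wind, pollution, lag) for lag in range(6)}
best_lag = max(correlations, key=lambda lag: abs(correlations[lag]))

for lag, value in correlations.items():
    print(f"lag {lag}: correlation {value:+.3f}")
print(f"strongest association at lag {best_lag}")
```

Real meteorological series are autocorrelated and subject to shared seasonal drivers, so a scan like this would normally be followed by models that account for those features before any causal claim is made.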

Driving a paradigm shift in causal analysis

Beyond enhancing the reliability of causal inference, big data research challenges prevailing paradigms and has the potential to drive significant shifts in causal analysis across multiple dimensions.

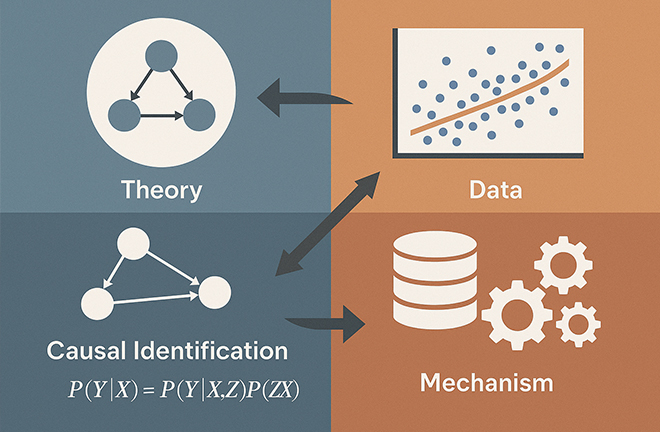

The first is a transition from a “theory-driven” approach to a framework that is both theory- and data-driven. Traditional causal analysis has been predominantly theory-driven: researchers first propose hypotheses and then test them against empirical data. However, the rise of big data has facilitated a data-driven research paradigm in which potential causal relationships are first identified through large-scale data mining and then interpreted theoretically. In recent years, scholars have introduced “computational grounded theory,” emphasizing that in the era of big data, social scientists should be adept at identifying causal relationships in complex datasets and proposing causal theories. Computational grounded theory, and the data-driven approach more broadly, is likely to play an increasingly central role in shaping the future of causal analysis and merits further scholarly attention.

The second is a shift from an emphasis on causal identification to a greater focus on mechanism explanation. Conventional causal analysis primarily seeks to identify the causal effect of an independent variable on a dependent variable using experimental or statistical techniques. This approach often lacks in-depth explanation of underlying mechanisms. In contrast, the era of big data has underscored the growing importance of mechanism-based explanations. For instance, predictive models based on big data often achieve high accuracy but provide little insight into the causal processes driving their predictions. As a result, a key challenge for future causal analysis will be to develop methodologies that open up the “black box,” thereby improving the transparency and interpretability of big data-driven models.

The third is a shift from “tracing from cause to effect” to “tracing from effect to cause.” Traditional causal analysis has typically focused on estimating the average effect of a particular causal variable on an outcome variable. However, it has paid relatively little attention to how a given outcome arises from multiple causal factors. In the era of big data, predictive research has seen unprecedented advancements. Improving predictive accuracy requires moving beyond isolated cause-effect relationships. As a result, future causal analysis is likely to shift towards complex causal networks to systematically investigate the diverse factors contributing to specific outcomes.

In conclusion, the rise of big data does not diminish the importance of causal analysis; instead, it presents new opportunities to enhance traditional methodologies of causal inference. Causal reasoning remains central to our understanding of the world, and the notion that correlation outweighs causation is an oversimplified and misleading interpretation of big data analytics. In the big data era, there is a dual imperative: on one hand, to leverage data and algorithms for generating predictive insights that guide decision-making, and on the other, to mitigate the risks of data overreach and algorithmic determinism, and protect individual autonomy. Addressing these concerns demands a renewed focus on causal analysis. Far from becoming obsolete, causal analysis remains essential—and more critical than ever—in the age of big data.

Xu Qi is a professor from the School of Social and Behavioral Sciences at Nanjing University.

Edited by REN GUANHONG