Survey delineates pros and cons of AI in liberal arts research

Data from the survey. Photo: Yu Jialing/CSST

To gain a more comprehensive understanding of how scholars in the humanities and social sciences perceive and apply large AI models, CSST recently invited hundreds of academics to participate in a survey and follow-up interviews on key issues. The 205 valid responses collected suggest that a quiet revolution may be underway—one in which large AI models are profoundly reshaping research paradigms, methodologies, and the role of scholars. While this transformation presents unprecedented opportunities, it also introduces new challenges.

Embracing technology

In his paper “How is it possible to construct a ‘literary theory without literature’?” Chen Dingjia, a research fellow from the Institute of Literature at the Chinese Academy of Social Sciences (CASS), explored the purpose of literary theory in the contemporary era through a dialogue with AI. He found its responses highly satisfactory. “Over the past two years, I have been following AI developments and learning about the technology. Using AI for data collection and article revision has significantly improved my efficiency, saving me one to two hours each time,” Chen told CSST.

Chen’s experience is not unique. According to the survey, 64.87% of the respondents have already incorporated AI tools into their academic research. Among them, 75.61% primarily used AI for literature review. Additionally, 91.22% believed AI enhances research efficiency, while 90.24% reported that it improves data processing.

Yang Binyan, an associate research fellow at CASS’s Institute of Journalism and Communication, shared her perspective: “Faced with vast databases, AI serves as a tireless assistant, rapidly extracting key arguments, identifying areas of debate, and even uncovering new sources and research leads.”

With DeepSeek gaining widespread recognition, its advanced capabilities have made it the preferred model for 82.44% of the surveyed scholars—far outpacing foreign alternatives like ChatGPT. “DeepSeek has a richer and more accurate Chinese-language corpus,” noted a doctoral student from a university in Sichuan Province, who requested anonymity.

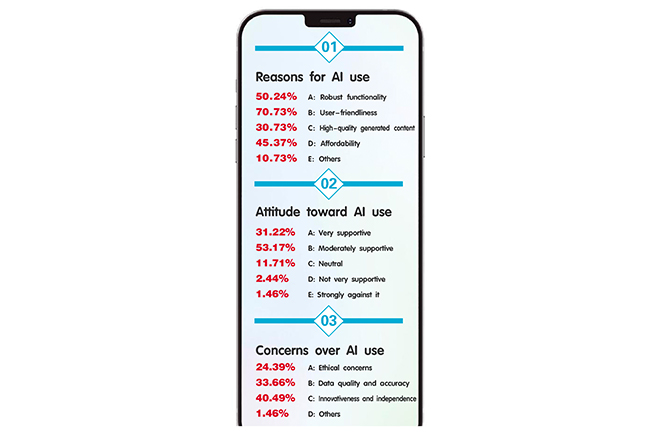

The rapid adoption of AI is largely driven by its ease of use. Among all the respondents, 70.73% cited “user-friendliness and simple operation” as their primary reason for using large AI models, particularly in research and content generation. Meanwhile, 50.24% valued AI’s robust functionality in meeting research needs, 45.37% highlighted its affordability, and 30.73% emphasized the high quality of its generated content.

As for the opportunities that large AI models bring to the humanities and social sciences, respondents widely recognized their ability to enhance research efficiency and improve data processing. Of the survey participants, 71.71% found AI models inspiring for expanding research methods, while 62.93% believed they offered new research perspectives. The survey also revealed that 58.05% of the respondents were curious about the capabilities and future potential of these models, while 63.41% were contemplating their impact on human society, culture, and values. This indicates that interest in AI extends beyond its technical functions to its broader societal implications.

‘AI hallucinations’

After DeepSeek shot to fame, users began testing its capabilities with increasingly complex questions. Chen Xuguang, a professor of film and television studies from the School of Arts at Peking University, shared a typical experience: “I once asked AI to evaluate my theory of ‘film industry aesthetics.’ It quickly summarized the theory’s origins and development, which is helpful for beginners trying to understand this concept.” However, Chen Xuguang found that AI did not provide deeper insights into the theory, and the evaluation templates it used could easily be applied to other scholars and theories.

In interviews, scholars informed CSST that while AI excels in literature organization, retrieval, and synthesis, its results vary in quality and reliability, requiring meticulous manual verification. Survey data corroborates this challenge: 73.66% of the respondents cited “inaccurate generated content” as the most common issue they encountered when using large AI models.

AI’s role in academic writing is expanding with the rapid advancement of information technology, but this expansion has sparked growing concern among scholars. According to the survey, 80% of the respondents feared that over-reliance on AI and the substitution of algorithmic thinking for human cognition could lead to complacent thinking and a decline in creativity.

When it comes to using large AI models, the innovation and independence of research are seen as paramount. Scholars contended that heavy reliance on large language models is not just a technical matter but an ethical one as well. Survey results show that 40.49% of the respondents emphasized that while AI can offer powerful analytical capabilities, ensuring the independence and originality of research should remain a top priority. Additionally, 33.66% expressed concern about data quality and accuracy, while ethical and moral considerations garnered less attention, with only 24.39% of the respondents voicing concern.

The challenges posed by AI and the need for ethical reflections are apparent. The training data used by AI models may harbor cultural biases, potentially distorting research outcomes. Moreover, the lack of transparency in decision-making processes within large models could undermine the interpretability of humanities research. AI-generated content may also obscure the ownership of academic achievements, requiring vigilance against technological abuse. Some scholars worried that AI could diminish the “human” aspects of humanities research, such as emotional expression in literature or the depth of philosophical dialectics.

‘Human-machine symbiosis’

Despite various concerns about AI, scholars are generally upbeat. Of the respondents, 92.68% believed large AI models have promising applications in humanities and social science research, and over 84% strongly supported their use in these fields.

“Machines and humans have their respective strengths. We should neither abandon machines due to their shortcomings nor rely on them entirely because of their convenience, sacrificing human agency and creativity.” The majority of scholars interviewed held an open-minded attitude toward AI.

Through investigation, CSST found that China’s education sector has already taken proactive steps in deploying AI tools. In the 2024 graduation season, the School of Communication at East China Normal University (ECNU) and the School of Journalism and Communication at Beijing Normal University (BNU) jointly released the “Guidelines for the Use of Generative AI for Students,” which cover compliance with relevant regulations and academic ethics, annotation of AI use, examination of generated content when necessary, and discipline-specific terms, among other requirements. This marks the first official guideline from China’s higher education sector on the normative use of generative AI in education and academic research.

“AI usage among students is now quite common. Forcible restrictions are tantamount to ‘covering up the ears and stealing the bell,’” said Wang Feng, dean of the School of Communication at ECNU. “AI should be used under the premise of explicit use norms, and without violating the basic principles of scholarship.”

Moreover, Zhejiang University has launched a series of online open courses on DeepSeek, offering in-depth instructions on overcoming computing power limitations and exploring how humans can build a new civilizational pact to coexist with AI. The School of Information Management at Sun Yat-sen University has instituted a “Digital Humanities Workshop” to teach researchers how to cross-verify AI results, promote human-computer interaction, and avoid ethical risks. BNU has also released China’s first “large model-driven multimodal textual analysis system” for journalism and communication. Across the country, many colleges and universities are actively exploring new ways to integrate AI into scholarship, testing its potential across various academic fields.

AI’s limitations

“In your view, will AI eventually replace scholars? What is the future of the humanities and social sciences?” When posed with these questions, interviewees offered sharply differing opinions.

“Within a decade, AI will supersede more than 70% of liberal arts disciplines, including literature, philosophy, and translation. The way humans learn will undergo a profound transformation,” predicted Zhao Yongsheng, a research fellow from the Academy of Global Innovation and Governance at the University of International Business and Economics.

Yet survey data tells a more complex story. While over 90% of the respondents registered optimism about the prospects of AI applications, only 15.12% reported using it “frequently” every day, 24.88% said they “rarely used it,” and some avoided it altogether. One respondent, a history professor, admitted: “[At present,] I use AI to look up information, but will not hand over argumentation, the core part of research, to the machine. The soul of humanities research lies in critical thinking, while AI has no soul.”

“[Currently,] AI can’t produce the insights in From the Soil [renowned Chinese sociologist Fei Xiaotong’s magnum opus], nor can it resolve the moral dilemma of the ‘trolley problem,’” said Yuan Li from the School of Philosophy at Renmin University of China. Compared to humans, AI excels at processing vast amounts of information, but it still lacks true cognition, emotional intelligence, and the ability to make value-based judgments. It can imitate, but its underlying logic depends on human wisdom. “True thought,” she emphasized, “is born from humanity’s relentless pursuit of meaning.”

Edited by CHEN MIRONG