Large language models present challenges for linguistics

The tensions between AI technology and traditional linguistic theory prompt critical reflections on the role of AI in future linguistic research. Photo: IC PHOTO

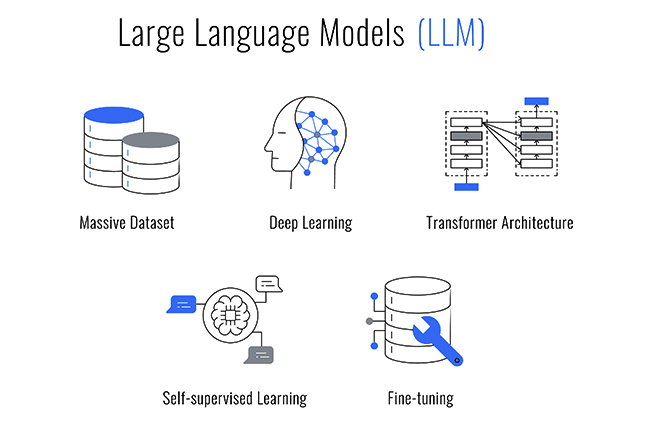

The rapid, iterative advancement of large language models (LLMs) has showcased remarkable capabilities in natural language processing while presenting novel challenges to contemporary linguistic theory. In 2023, Noam Chomsky published an article in The New York Times entitled “The False Promise of ChatGPT,” in which he contended that LLMs rely solely on pattern matching across extensive datasets, lack genuine linguistic understanding, and amount to a form of plagiarism aided by advanced technology. In response, Geoffrey Hinton offered a sharp rebuttal, arguing that these models possess significant potential for understanding and cognition. This intellectual dispute between two preeminent scholars goes beyond technical debate and touches on fundamental questions about the nature of language and human cognition. It reflects the tensions between artificial intelligence (AI) technology and traditional linguistic theory and prompts critical reflection on the role of AI in future linguistic research.

Innate mechanism vs data-driven learning

Chomsky is widely acknowledged as the most influential linguist of the 20th century and the founder of the theory of generative grammar. He posited that humans possess an innate linguistic capacity, a cognitive mechanism he called the “language faculty,” which is biologically determined and genetically inherited. In Chomsky’s framework, this intrinsic ability enables individuals to comprehend and produce an infinite array of sentences. The language faculty, he suggested, is underpinned by specialized neural modules in the brain that enable the rapid derivation of complex grammatical rules and sentence structures, even from limited linguistic input. The theory is further supported by the “poverty of the stimulus” argument: the language children hear is too limited and fragmentary to account for the grammar they acquire, yet they readily produce syntactically complex sentences they have never encountered. For Chomsky, this gap between sparse input and rich output points to an inborn language faculty that guides the acquisition of complex grammatical structures beyond what the available input could supply.

Hinton takes a markedly different stance, rejecting Chomsky’s hypothesis as fundamentally misleading. He contends that language acquisition depends not on an innate universal grammar but on the progressive accumulation of environmental input and experiential learning, a quintessentially data-driven process. As evidence, he points to LLMs, which operate without innate structures or predefined linguistic rules yet produce fluent, idiomatic natural language solely through exposure to extensive datasets. In his view, their success shows that linguistic competence can emerge from large volumes of language input and iterative feedback rather than being determined by biological inheritance.

Universal Grammar vs vector representations

Central to Chomsky’s theory of generative grammar is the concept of “Universal Grammar,” which holds that all human languages rest on an innate, universal set of rules. Although languages may differ vastly on the surface, their underlying structures are governed by shared principles and constraints defined by Universal Grammar. A pivotal feature of Universal Grammar is recursion: a rule can apply to its own output, embedding one structure inside another of the same type, as when a relative clause is nested inside a noun phrase. This recursive property enables humans to construct an infinite variety of sentences and convey a broad spectrum of ideas and viewpoints from a finite lexicon and a finite set of grammatical rules.
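To make the idea of recursion concrete, the sketch below uses a toy grammar invented purely for illustration (the rules, the words, and the function names `noun_phrase` and `sentence` are hypothetical, not drawn from any linguistic formalism Chomsky proposed). Because the noun-phrase rule can contain another noun phrase, two finite rules are enough to generate sentences of unbounded length.

```python
# Toy recursive grammar (hypothetical, for illustration only):
#   S  -> NP "slept"
#   NP -> "the mouse" | "the cat that chased" NP
# The second NP alternative contains NP itself, so a finite rule set
# can generate sentences of any length.

def noun_phrase(depth: int) -> str:
    """Expand NP, choosing the recursive alternative `depth` times."""
    if depth == 0:
        return "the mouse"
    return "the cat that chased " + noun_phrase(depth - 1)


def sentence(depth: int) -> str:
    """Expand S -> NP "slept" using an NP nested `depth` levels deep."""
    return noun_phrase(depth) + " slept"


for d in range(3):
    print(sentence(d))
# the mouse slept
# the cat that chased the mouse slept
# the cat that chased the cat that chased the mouse slept
```

Each extra level of depth yields a new, longer grammatical sentence, which is the sense in which finite means can produce infinite output.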

In contrast to Chomsky, Hinton emphasizes that LLMs do not rely on predefined, fixed rules; instead, they generate language using vector representations within neural networks. In these networks, information is encoded as high-dimensional vectors that propagate through multiple layers, progressively capturing more intricate patterns and features in the data. This mechanism transforms diverse inputs, such as language, images, or other forms of data, into vectors that can be processed through mathematical operations. Through training, the network learns to use these vectors to produce meaningful outputs, such as coherent natural language sentences or realistic images. Representing data as vectors allows the model to detect correlations and patterns in a high-dimensional space, which forms the basis of its generative capabilities.
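A minimal sketch of what “processing vectors through mathematical operations” can look like, assuming hand-picked toy values: the three 4-dimensional word vectors and the single layer’s weights below are hypothetical, whereas a real model learns vectors with hundreds or thousands of dimensions and stacks many layers. Cosine similarity is used here as one common way to measure how closely two vectors point in the same direction.

```python
import math

# Hypothetical 4-dimensional word vectors, chosen by hand for illustration.
embeddings = {
    "king":  [0.9, 0.8, 0.1, 0.0],
    "queen": [0.9, 0.7, 0.2, 0.1],
    "apple": [0.0, 0.1, 0.9, 0.8],
}

# Hypothetical weights for one dense layer (3 outputs x 4 inputs).
# In a trained network these numbers are learned, and many layers are stacked.
W = [[1.0, 1.0, 0.0, 0.0],
     [0.0, 0.0, 1.0, 1.0],
     [0.5, -0.5, 0.5, -0.5]]
b = [0.0, 0.0, 0.0]


def layer(x, weights, bias):
    """Dense layer with ReLU: out[i] = max(0, sum_j weights[i][j] * x[j] + bias[i])."""
    return [max(0.0, sum(w * xj for w, xj in zip(row, x)) + bi)
            for row, bi in zip(weights, bias)]


def cosine(u, v):
    """Cosine similarity: near 1 when two vectors point the same way."""
    dot = sum(p * q for p, q in zip(u, v))
    return dot / (math.sqrt(sum(p * p for p in u)) * math.sqrt(sum(q * q for q in v)))


# Pass every word vector through the layer to get a new representation.
hidden = {word: layer(vec, W, b) for word, vec in embeddings.items()}

print(round(cosine(embeddings["king"], embeddings["queen"]), 3))
print(round(cosine(embeddings["king"], embeddings["apple"]), 3))
print(round(cosine(hidden["king"], hidden["queen"]), 3))
print(round(cosine(hidden["king"], hidden["apple"]), 3))
```

With these toy values, “king” and “queen” score close to 1 both before and after the layer, while “king” and “apple” score much lower: relatedness is expressed as geometric proximity rather than as an explicit rule.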

Imitation and assemblage vs inference and prediction

Chomsky argues that LLMs are incapable of genuine language understanding. In his view, they merely mimic human linguistic behavior by performing statistical analyses on vast datasets, assembling fragments of existing data without grasping the underlying meaning of language. Although these models can generate seemingly meaningful text through pattern recognition and word associations derived from their training data, their linguistic abilities amount to superficial imitation rather than authentic understanding, lacking both semantic understanding and reasoning. Chomsky asserts that genuine language competence involves reasoning, the construction of meaning, and the integration of contextual and background knowledge, core elements that he maintains LLMs lack.

Hinton, on the other hand, contends that LLMs achieve a certain degree of language understanding through the mechanisms of neural networks. In these networks, linguistic symbols are encoded as high-dimensional vectors, and new vectors are generated through interactions across multiple layers of interconnected neurons. This process goes beyond mere text autocompletion: it involves deep feature analysis and the prediction of relationships between data points. For Hinton, the essence of understanding in such models lies in transforming symbols into vectors and letting those vectors interact to predict the next symbol. As for the “hallucinations” LLMs often produce, Hinton interprets them as confabulation. Rather than deliberately fabricating information, an LLM facing insufficient data or uncertainty still generates output from probabilistic predictions based on the features it has learned. Human thought shows a similar pattern: people often fill gaps in memory with plausible but inaccurate details without any intent to deceive.
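The link between probabilistic prediction and confabulation can be sketched in a few lines. The example below is a simplified illustration, not how any particular LLM is implemented: the candidate words and their scores are hypothetical, and real models score tens of thousands of tokens at every step. Raw scores are turned into probabilities with a softmax, and the next symbol is sampled from them. When one continuation dominates, the output is reliable; when the scores are nearly flat, the model still produces a fluent-looking answer, which is the behavior Hinton describes as confabulation.

```python
import math
import random


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    top = max(scores.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - top) for tok, s in scores.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def predict_next(scores, rng):
    """Sample the next symbol in proportion to its probability."""
    probs = softmax(scores)
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


# Hypothetical scores for the word after "The capital of France is":
# the training data strongly favor one continuation.
confident = {"Paris": 8.0, "Lyon": 2.0, "Rome": 1.0}

# Hypothetical scores for a birth year the training data barely cover:
# no continuation is clearly supported, yet one will still be chosen.
uncertain = {"1887": 1.2, "1889": 1.1, "1891": 1.0}

rng = random.Random(0)
print(softmax(confident))
print(softmax(uncertain))
print(predict_next(uncertain, rng))  # a plausible-looking year either way
```

Nothing in the sampling step distinguishes a well-supported answer from a poorly supported one; both emerge from the same probabilistic mechanism.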

Implications for linguistics

The debate between Hinton and Chomsky highlights two fundamentally distinct paradigms of language understanding: one rooted in rules and structure, and the other grounded in data and algorithms. This divergence extends beyond academic discourse, reflecting deeper contrasts between the operational principles of human cognition and machine intelligence. The discussion offers significant insights for the future integration of linguistics and AI. Advancing our understanding of language requires seeking a synthesis between rule-based linguistic theories and data-driven learning approaches. By combining linguistic principles with advancements in deep learning, it may be possible to develop models with enhanced explanatory power.

While LLMs currently function as “black boxes” with limited interpretability, their remarkable success in natural language processing, particularly their capacity for “emergence” (abilities that appear as models grow in scale rather than being explicitly programmed), has expanded the scope of linguistic inquiry and demonstrated substantial scientific value.

In this regard, future researchers should adopt a rational and cautious perspective, avoiding both the overestimation of LLMs’ capacity for genuine understanding and the underestimation of their potential for processing complex data and generating language. LLMs offer not only a novel experimental platform for advancing linguistic research but also an opportunity to reconsider the intricate relationships between language, cognition, and intelligence within a broader conceptual framework. The ongoing development of these models could serve as a critical catalyst for linguistic theory, guiding the academic community toward a deeper comprehension of the mechanisms of language generation and the cognitive processes that support them.

Wang Junsong is an associate professor at the School of Foreign Studies at Northwestern Polytechnical University.

Edited by REN GUANHONG