Responsibility gap and governance of AI social experiments

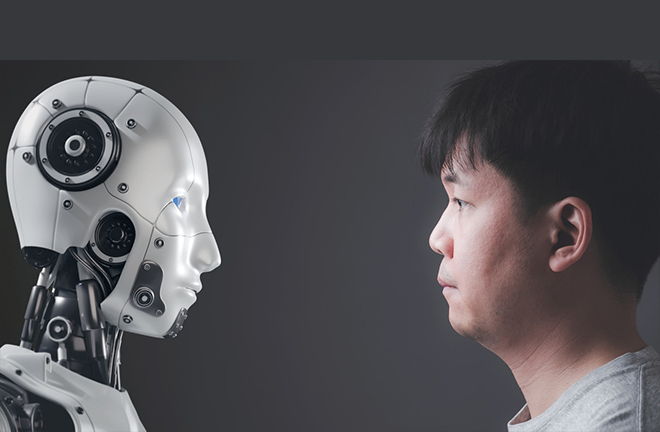

Human agents should be the ultimate decision-makers for key actions of autonomous systems and bear both moral and legal responsibility. Photo: TUCHONG

AI social experiments have become an important supporting approach for China to assess and mitigate the potential social impact of AI. The policy of “scenario-driven innovation” provides a framework for implementing these experiments and guides the construction of specific scenarios. However, this also means that the “responsibility gap” that haunts AI applications may first surface in pilot scenarios, posing challenges to the ethical governance of AI social experiments.

Applying AI to meet diverse social needs must be guided by real-world scenarios. A scenario can be understood as the combination of two parts. The first is an embedding field, meaning a defined spatiotemporal framework. The second is a demand situation, meaning the content of that framework, including behaviors, elements, and relationships. In the digital economy era, AI provides technical support for meeting situational needs characterized by iteration, fragmentation, embeddedness, and the interdependence of the real and the virtual.

As scenarios iterate and different scenarios fuse, disorder arises from four salient technical attributes that emerge from the interaction between AI and scenarios:

- learning capability
- opacity (the “black box” problem)
- impact on various stakeholders, including those who do not use AI systems
- autonomous or semi-autonomous decision-making

The lack of a regulatory framework for human-machine interaction threatens the consistency of societal moral frameworks and undermines the legal foundations of responsibility, producing a “responsibility gap.” Because these challenges seldom manifest fully in traditional laboratory settings, real-world scenarios must be brought into the experimental stage of AI development. This gives rise to AI social experiments.

Unlike natural science experiments, which center on “natural objects” and are conducted under complete human control, AI social experiments focus on “scenarios.” Here, scenarios are sociotechnical systems that integrate “hard infrastructure” (artifacts) with “soft environments” (social norms). Experimenters, legislators, and all participants (including the public, users, and even bystanders) are “social experimenters.” There is no absolute distinction between subject and object. The responsibility gap in human-machine interaction scenarios is therefore also an ethical challenge for AI social experiments.

The normative foundations of sociotechnical systems hold that human agents should be the ultimate decision-makers for the key actions of autonomous systems and should bear both moral and legal responsibility. In recent years, the international community has actively promoted and continually refined “meaningful human control” (MHC). This framework for AI ethics and governance can offer new perspectives for addressing the responsibility gap in AI social experiments.

The core idea of MHC is that humans retain ultimate control over intelligent machines and assume moral responsibility for their actions. Netherlands-based scholars Filippo Santoni de Sio, Giulio Mecacci, and Jeroen van den Hoven draw on two sources. The first is the philosophical inquiry of American scholars John Martin Fischer and Mark Ravizza into the relationship between guidance control, free will, and moral responsibility. The second is the value-sensitive design approach. On this basis, they propose that MHC-compliant intelligent systems should satisfy the dual ethical design principle of “tracking-tracing.”

“Tracking” ensures a substantive relationship between human agents and intelligent systems. In other words, the behavior of a human-machine system must respond to human motivations in real-world scenarios. “Tracing” sets a temporal threshold for accountability. The behavior, capabilities, and potential social impact of a human-machine system must be traceable to at least one human agent who has a proper technical and moral understanding of the system. That person may be involved in designing the system or may interact with it.

MHC-compliant intelligent systems are sociotechnical systems, and they resemble AI social experiments in their scenarios and objects. Comprehensive, MHC-guided governance of the responsibility gap in AI social experiments therefore requires adherence to the dual principle of “tracking-tracing,” which makes the various forms of responsibility more effective. Overall, MHC requires that humans and institutions, rather than computers and algorithms, control the key decisions made by advanced intelligent systems and shoulder moral responsibility for them. MHC thus provides AI social experiments with a value orientation, a guiding framework, and high standards.

Yu Ding is a lecturer at the School of Marxism at Zhejiang University. Li Zhengfeng is a professor at the School of Social Sciences at Tsinghua University.

Edited by WANG YOURAN