Navigating the ethical landscape of medical AI

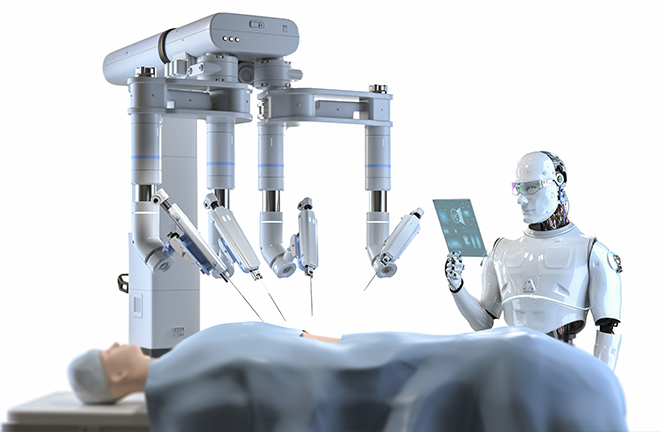

Potential deployment of AI in the operating room. Photo: TUCHONG

As advances in medical AI technology and growing commercial investment pave the way for an intelligent transformation of the healthcare sector, the ethical implications of medical artificial intelligence have become a growing concern for academia, industry, and public health authorities. Over the 30 years of its development, research on the ethics of medical AI has progressed through three distinct stages.

The initial phase of exploration focused on three key areas. Firstly, it aimed to integrate ethics into the methodology of intelligent healthcare. Secondly, it sought to explore solutions to ethical issues related to medical AI by analyzing technical properties and application cases. Lastly, it endeavored to establish a knowledge management framework that underpins ethical decision-making by integrating the ethical evaluation of medical AI with clinical practice and the social environment.

In the period of advancement, as the medical AI market was booming, research on the ethics of medical AI placed greater emphasis on practice-oriented risk analysis and ethical issues in different medical scenarios.

In the period of accelerated development, research on the ethics of medical AI is conducted along three dimensions. The first concerns fundamental ethical issues such as algorithmic bias in machine learning, potential emotional disorders arising from human-machine interaction, and ethical risks associated with the use of AI in mental health. The second dimension involves the construction of ethical governance systems for medical AI. Theoretical frameworks such as stakeholder analysis and responsible innovation have been introduced to emphasize parallel development of technological innovation and ethical norms. The third dimension pertains to value judgment and epistemological foundations of the ethics of medical AI, focusing on the reliability, interpretability, and transparency of the process.

Today, the ethics of medical AI have developed from a secondary issue of technology research into a specialized field. Since the context in which technology is developed and applied varies considerably across sub-fields of medical AI, the ethics of medical AI can be categorized as general ethics or specific ethics.

General ethics centers on universal ethical quandaries such as risk control in data-based predictions, data platform permissions, and equitable allocation of intelligent medical resources. Future studies should clarify whether general ethical issues are triggered by technical inadequacy or by the passive alienation of technology in the social environment, as well as the respective roles of technology itself and the external environment.

Specific ethics entails analyzing ethical issues in particular contexts of medical practice. For instance, given the complexity of human health, it is challenging to ensure the accuracy of health predictions offered by smart wearable devices. The subjectivity of physicians could be dissolved by algorithms once intelligent technology is applied in diagnostics and treatment as well as hospital management. Attribution of liability may become more difficult as diagnosis and treatment grow less paternalistic and patients/consumers gain autonomy.

The academic community has proposed a set of ethical guidelines for medical AI, which are largely derived from traditional medical ethics. However, in view of the changes in subjects of medical practice, modes of interaction, and forms of medical information, it is necessary to re-examine the applicability, reliability, and adequacy of traditional principles of medical ethics.

Future research on the ethics of medical AI needs to design ethical guidelines for the implementation of technology that are applicable to particular medical scenarios, while also addressing overall ethical quandaries such as the formation of moral agency in intelligent healthcare. Such endeavors will not only advance research on AI ethics, but also accelerate the innovative development of ethics as a discipline.

The ethics of medical AI constitute an essential support for intelligent healthcare and an important theoretical foundation for laws and policies regarding medical AI, and thus require the joint efforts of ethicists, AI experts, technology companies, government agencies, and other stakeholders. The synergy between ethical guidelines and governance systems not only ensures that medical AI is technically reliable and socially viable, but also provides an institutional safeguard against the deviation of medical AI from its intended purpose. Relevant interdisciplinary issues need to be further studied.

It is necessary to continuously adjust and improve the guiding principles and regulatory systems in a series of pilot programs to ensure the effectiveness of ethical review and guidelines for medical AI. Effectiveness should be defined based on the evaluation of social consequences and the emotional feedback of patients/consumers. The construction of governance systems for medical AI requires establishing “top-down” regulatory frameworks and accommodating the “bottom-up” ethical demands of various stakeholders. Psychology has the potential to facilitate future research on the ethics of medical AI, as it can provide empirical insights into ethical evaluation of medical AI from the perspective of patients/consumers in particular medical scenarios.

Wang Chen and Xu Fei (professor) are from the Department of Philosophy of Science and Technology at the University of Science and Technology of China (USTC). Sun Qigui (associate professor) is from the School of Public Affairs at USTC.

Edited by WANG YOURAN