Text mining boosts knowledge system construction

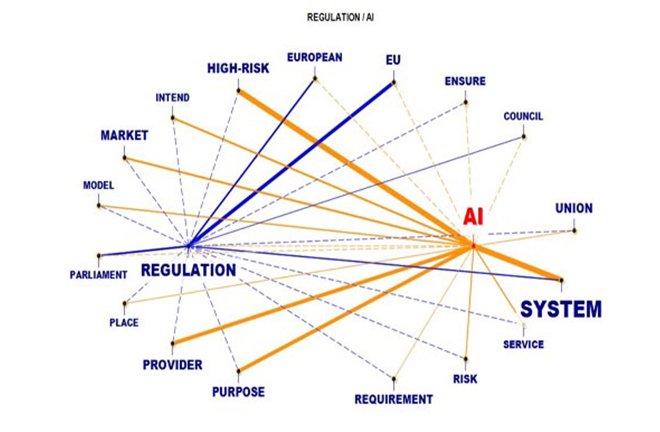

FILE PHOTO: Co-occurrence analysis

With the arrival of the digital age, knowledge is expanding, intersecting, and spreading at an unprecedented pace. The widespread adoption of the internet and the improvement of academic databases have created an immense repository of textual data for knowledge discovery. Academic literature, professional books, research reports, and course materials in electronic form are now abundant and easily accessible. Traditional methods of organizing knowledge—reading and summarizing texts manually—are increasingly inadequate when faced with such volume. They are prone to sampling bias and may overlook key information due to the subjectivity of human judgment. Tasks such as extracting discipline-specific concepts, classifying ideas, constructing relational networks, and analyzing paradigm shifts now call for more precise, efficient, and intelligent methods.

Text mining, also known as text data mining, refers to the process of extracting high-value, latent information from large volumes of unstructured or semi-structured text, information that conventional methods cannot readily obtain. This technology plays a crucial role in building conceptual, theoretical, methodological, and application systems. Conceptual systems aim to map out core disciplinary concepts and their interconnections. Theoretical systems focus on integrating and refining theoretical knowledge. Methodological systems seek to optimize and innovate research techniques. Application systems emphasize putting academic knowledge into practice. In navigating the complexities of the digital era, text mining emerges as a vital tool for advancing the construction of comprehensive knowledge systems.

Technical foundation

Text mining combines natural language processing (NLP), machine learning, statistics, and data mining. Core tasks include text preprocessing, feature extraction, classification, clustering, sentiment analysis, entity recognition, relationship extraction, and topic modeling.

For the purpose of knowledge system construction, key techniques include feature engineering, dimensionality reduction, semantic networks, topic modeling, and time series analysis. Used in concert, these tools can extract core concepts from academic literature, establish conceptual relationships, support theoretical development, and facilitate multi-level analysis of research methods and subjects—at the macro, meso, and micro levels—while tracking the trajectory of academic inquiry.

Feature engineering plays a foundational role in identifying core academic concepts. It includes techniques such as the bag-of-words model, term frequency–inverse document frequency (TF-IDF), topic modeling, and word embeddings. The bag-of-words model treats a text as a collection of words and identifies key terms by analyzing frequency. TF-IDF weighs both the frequency of terms within a document and their rarity across documents to highlight key concepts. Topic models like Latent Dirichlet Allocation (LDA) uncover underlying themes through word co-occurrence patterns. Word embeddings, such as Word2Vec and GloVe, map words into low-dimensional vector spaces, allowing for the clustering of semantically similar terms to identify conceptual groupings.
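As an illustration of the TF-IDF weighting described above, the following is a minimal sketch in plain Python. The function name `tf_idf`, the whitespace tokenization, and the toy corpus are assumptions made for this example; real projects would normally add proper tokenization, stop-word removal, and smoothing.

```python
import math
from collections import Counter

def tf_idf(docs):
    """Return one {term: weight} dict per document.

    tf  = count of the term in the document / document length
    idf = log(number of documents / number of documents containing the term)
    """
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    # Document frequency: in how many documents does each term appear?
    df = Counter(term for tokens in tokenized for term in set(tokens))
    weights = []
    for tokens in tokenized:
        counts = Counter(tokens)
        weights.append({
            term: (count / len(tokens)) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return weights

# Hypothetical toy corpus, for illustration only.
corpus = [
    "topic models reveal latent themes",
    "word embeddings cluster similar terms",
    "topic models and word embeddings support concept extraction",
]
scores = tf_idf(corpus)
# Terms unique to one document (e.g. "reveal") outweigh terms shared
# across documents (e.g. "topic") within that document.
print(sorted(scores[0].items(), key=lambda kv: kv[1], reverse=True))
```

A term that occurs in every document receives a weight of zero under this unsmoothed formula, which is why library implementations often add a smoothing constant.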

Dimensionality reduction is necessary because textual data are high-dimensional. Techniques such as correspondence analysis and t-distributed stochastic neighbor embedding (t-SNE) project high-dimensional data into lower dimensions, making patterns and relationships easier to detect. Correspondence analysis decomposes a word-frequency matrix into a small number of principal dimensions and plots terms and documents in the same space, so that the associations between them become visible and can help scaffold a knowledge system. t-SNE does not cluster data itself; it places similar data points close together in a two- or three-dimensional map, where the resulting groupings can clarify key topics and their interconnections, offering a foundation for organizing knowledge. Distances between well-separated groups in a t-SNE map should be read with caution, since the method mainly preserves local neighborhoods.
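The correspondence analysis step can be sketched as follows, assuming NumPy is available. The function name `correspondence_analysis` and the small term-by-document count matrix are hypothetical; the computation follows the standard formulation (singular value decomposition of standardized residuals), not any particular study's pipeline.

```python
import numpy as np

def correspondence_analysis(counts):
    """Simple correspondence analysis of a term-by-document count matrix.

    Returns row (term) coordinates, column (document) coordinates,
    and the principal inertias (squared singular values).
    Assumes every row and every column has a nonzero total.
    """
    N = np.asarray(counts, dtype=float)
    P = N / N.sum()                  # correspondence matrix
    r = P.sum(axis=1)                # row masses
    c = P.sum(axis=0)                # column masses
    # Standardized residuals: departure from row/column independence.
    S = (P - np.outer(r, c)) / np.sqrt(np.outer(r, c))
    U, sing, Vt = np.linalg.svd(S, full_matrices=False)
    # Principal coordinates of rows and columns.
    row_coords = (U * sing) / np.sqrt(r)[:, None]
    col_coords = (Vt.T * sing) / np.sqrt(c)[:, None]
    return row_coords, col_coords, sing ** 2

# Hypothetical counts: 4 terms (rows) across 3 documents (columns).
counts = np.array([
    [10, 2, 1],
    [8, 3, 0],
    [1, 9, 7],
    [0, 6, 12],
])
rows, cols, inertia = correspondence_analysis(counts)
# Share of total inertia captured by each dimension.
print(inertia / inertia.sum())
```

Plotting the first two columns of `rows` and `cols` on the same axes gives the familiar map of terms and documents; the inertia shares indicate how much of the association each axis accounts for.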

Topic modeling includes techniques such as Latent Semantic Analysis, LDA, Dynamic Topic Models, Structural Topic Models, and Biterm Topic Models, which are particularly well-suited to large-scale text analysis. Implementation involves preprocessing, selecting appropriate topic numbers and algorithms, and then interpreting and labeling the extracted topics in line with academic standards. Topic relationships are determined by analyzing similarity and co-occurrence frequencies, which helps clarify their logical connections. The final step involves distilling key knowledge elements to form structured knowledge units, thereby supporting the construction of a cohesive knowledge system framework.
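One way to quantify topic relationships by similarity is to compare the topics' word distributions. The sketch below uses cosine similarity. The topic names and word weights are invented for illustration and stand in for the output of a fitted topic model; `rank_topic_pairs` is a hypothetical helper, not part of any library.

```python
import math
from itertools import combinations

def cosine_similarity(p, q):
    """Cosine similarity between two sparse {word: weight} vectors."""
    dot = sum(weight * q.get(word, 0.0) for word, weight in p.items())
    norm_p = math.sqrt(sum(w * w for w in p.values()))
    norm_q = math.sqrt(sum(w * w for w in q.values()))
    if norm_p == 0 or norm_q == 0:
        return 0.0
    return dot / (norm_p * norm_q)

def rank_topic_pairs(topics):
    """Return (topic_a, topic_b, similarity) tuples, most similar first."""
    pairs = [
        (a, b, cosine_similarity(topics[a], topics[b]))
        for a, b in combinations(sorted(topics), 2)
    ]
    return sorted(pairs, key=lambda item: item[2], reverse=True)

# Hypothetical topic-word weights (assumed, not taken from a real model).
topics = {
    "text_mining": {"corpus": 0.4, "term": 0.3, "cluster": 0.3},
    "network_analysis": {"node": 0.5, "edge": 0.3, "cluster": 0.2},
    "sociology": {"class": 0.6, "institution": 0.4},
}
for a, b, sim in rank_topic_pairs(topics):
    print(f"{a} ~ {b}: {sim:.3f}")
```

Pairs with high similarity are candidates for merging or for a parent-child relationship when labeling topics; pairs with zero overlap share no vocabulary in this representation.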

Semantic networks play a critical role in revealing knowledge structures. They encompass knowledge representation, association mining, structural analysis, inference, and visualization. In knowledge representation and modeling, academic terms and concepts are abstracted as nodes, and their relationships as edges, forming a network. Co-occurrence analysis and semantic similarity measures are used to uncover hidden associations. Structural analysis employs metrics like node centrality and community detection to assess the importance of concepts and define subfields. Inference techniques trace indirect connections and potential knowledge by searching network paths, providing support for academic research.
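The node-and-edge representation, a centrality measure, and path-based inference can be sketched in a few lines of standard-library Python. The concept names and edges below are hypothetical, and degree centrality and breadth-first search are only one simple choice among the many metrics and search strategies available.

```python
from collections import defaultdict, deque

def build_network(edges):
    """Build an undirected graph {node: set of neighbours} from concept pairs."""
    graph = defaultdict(set)
    for a, b in edges:
        graph[a].add(b)
        graph[b].add(a)
    return graph

def degree_centrality(graph):
    """Share of the other nodes that each node is directly linked to."""
    denom = max(len(graph) - 1, 1)
    return {node: len(nbrs) / denom for node, nbrs in graph.items()}

def shortest_path(graph, start, goal):
    """Breadth-first search; returns one shortest path as a list, or None."""
    queue = deque([[start]])
    visited = {start}
    while queue:
        path = queue.popleft()
        if path[-1] == goal:
            return path
        for nbr in sorted(graph.get(path[-1], ())):
            if nbr not in visited:
                visited.add(nbr)
                queue.append(path + [nbr])
    return None

# Hypothetical concept links, e.g. derived from co-occurrence analysis.
edges = [
    ("text mining", "topic model"),
    ("text mining", "word embedding"),
    ("text mining", "semantic network"),
    ("semantic network", "knowledge graph"),
    ("knowledge graph", "ontology"),
]
graph = build_network(edges)
centrality = degree_centrality(graph)
print(max(centrality, key=centrality.get))           # most connected concept
print(shortest_path(graph, "topic model", "ontology"))  # indirect connection
```

The path returned by `shortest_path` shows how two concepts that never co-occur directly may still be linked through intermediate concepts.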

Time series analysis treats academic data as sequences that change over time, making it possible to identify patterns and trends and to fit models to them. By extracting time-related features, plotting trends, applying spectral analysis, and examining sequence correlations, researchers can track knowledge development and forecast emerging research directions.
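A very simple version of trend tracking is to measure, year by year, the share of documents that mention a term and then fit a straight line to that series. The sketch below does this with ordinary least squares. The records, the term, and the function names are assumptions for illustration, and a linear slope is only a rough first indicator compared with the spectral and correlation methods mentioned above.

```python
def yearly_share(records, term):
    """records: iterable of (year, text).

    Returns {year: share of that year's documents mentioning the term}.
    """
    totals, hits = {}, {}
    for year, text in records:
        totals[year] = totals.get(year, 0) + 1
        if term in text.lower().split():
            hits[year] = hits.get(year, 0) + 1
    return {year: hits.get(year, 0) / totals[year] for year in sorted(totals)}

def linear_trend(series):
    """Least-squares slope of value on year (needs at least two distinct years)."""
    years = list(series)
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(series.values()) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (series[x] - mean_y) for x in years)
    return sxy / sxx

# Hypothetical abstracts tagged with publication year.
records = [
    (2020, "bag of words features"),
    (2020, "manual coding of texts"),
    (2021, "word embeddings for concepts"),
    (2021, "topic models of abstracts"),
    (2022, "contextual embeddings in sociology"),
    (2022, "embeddings and knowledge graphs"),
]
series = yearly_share(records, "embeddings")
print(series, linear_trend(series))
```

A positive slope suggests growing attention to the term; with real data, longer series and normalization by field size would be needed before drawing conclusions.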

Application prospects

Text mining has already advanced the construction of knowledge systems, particularly in three areas: bibliometric research; the derivation and tracking of academic concepts; and the development and application of new tools in ontology engineering.

Bibliometric research applies quantitative methods to analyze academic literature, citation networks, and keyword co-occurrence, helping to reveal the internal logic and structure of scholarly development. Citation analysis, for instance, can trace the evolution of core literature, identify key scholars and institutions, and provide empirical support for the formation of disciplinary systems. Analysis of high-frequency and emergent keywords helps capture research frontiers and trending topics, offering guidance for the dynamic updating of knowledge systems. Analyses of international collaboration networks also shed light on academic globalization and foster interdisciplinary integration and innovation.
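Keyword co-occurrence, one of the bibliometric measures mentioned above, amounts to counting how often two keywords are assigned to the same paper. A minimal sketch follows; the keyword lists are invented, and `keyword_cooccurrence` is a hypothetical helper name.

```python
from collections import Counter
from itertools import combinations

def keyword_cooccurrence(papers):
    """Count how many papers list each unordered pair of keywords."""
    pair_counts = Counter()
    for keywords in papers:
        # Normalize case and drop duplicates so each pair counts once per paper.
        unique = sorted({k.lower() for k in keywords})
        pair_counts.update(combinations(unique, 2))
    return pair_counts

# Hypothetical author keywords, one list per paper.
papers = [
    ["text mining", "topic model", "LDA"],
    ["Text Mining", "topic model"],
    ["text mining", "knowledge graph"],
    ["knowledge graph", "ontology"],
]
pairs = keyword_cooccurrence(papers)
print(pairs.most_common(3))
```

The resulting pair counts can serve as edge weights in a keyword network, where strongly connected groups of keywords often correspond to research themes.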

The derivation and tracking of academic concepts is particularly valuable in the humanities and social sciences, where topic modeling can help analyze historical texts to identify core issues and shifts in thought. For example, Arjuna Tuzzi has used correspondence analysis and topic analysis to examine the historical development of academic literature; Giuseppe Giordan and colleagues have applied topic models to abstracts from leading American sociology journals to trace the discipline’s evolution; and Wang Shunyu and Chen Ruizhe have used structural topic models to analyze the abstracts of papers related to the Belt and Road Initiative, highlighting regional differences in scholarly focus. Text mining has also been applied to terminology standardization and academic influence assessment, offering technical support for the normalization and continual refinement of knowledge systems.

Finally, the development and application of new tools in ontology engineering has greatly enhanced the systematization and intelligent construction of knowledge systems. These tools automatically extract key concepts, terms, and their semantic relationships to build structured knowledge networks that expose the internal logic and evolution of disciplinary knowledge. In computer science, ontology editors such as Protégé are used to build ontologies in the Web Ontology Language (OWL), a W3C standard that provides a common framework for knowledge representation and reasoning in artificial intelligence. In the social sciences, semantic analysis tools are used to mine policy texts and construct knowledge graphs, supplying a scientific basis for policymaking and evaluation. These tools address persistent challenges such as vague concepts and unclear relationships and, through dynamic updating and cross-domain integration, help drive the ongoing evolution and innovation of knowledge systems.

Nevertheless, building knowledge systems through text mining still presents challenges. First, natural language is inherently complex, marked by ambiguity, polysemy, metaphor, and flexible syntax, all of which complicate the identification and extraction of key concepts. Second, text quality varies widely, with common issues such as misspellings, grammatical errors, and inconsistent abbreviations. Noise from irrelevant or redundant content, including advertisements, can further hinder accurate concept extraction and raise processing costs. Third, some disciplines are highly specialized, with unique terminologies, while others, especially emerging interdisciplinary fields, lack standardized conceptual boundaries. These conditions require researchers to possess deep domain knowledge and analytical precision, making knowledge extraction more demanding. Finally, many semantic relationships, such as causality, hyponymy, or coordination, are implicit and require advanced analysis and reasoning to be accurately captured and represented in semantic networks.

Text mining presents new opportunities for building knowledge systems and offers significant academic value. By automating the processing of vast academic corpora, it enables efficient extraction of key concepts, terms, and their semantic relationships, supporting the systematization and structuring of disciplinary knowledge. It can also detect research frontiers and emerging trends, revealing the inner dynamics of knowledge evolution and informing strategic planning. Furthermore, text mining fosters interdisciplinary integration and innovation, offering methodological tools for the growth of new, hybrid fields. Leveraging these capabilities will advance the refinement and expansion of knowledge systems and support the development of an independent knowledge system.

Wang Shunyu is a professor from the School of Foreign Languages at Xijing University.

Edited by ZHAO YUAN
