AI’s impacts on cultural security and countermeasures

The analogy of technological advancement as a double-edged sword is particularly fitting in the AI era. Photo: TUCHONG

Amidst the current sci-tech revolution and the new wave of industrial transformation, artificial intelligence (AI) technology has emerged as a core force driving a fundamental shift in global civilization. Relying on cutting-edge technologies such as mobile internet, big data, supercomputing power, sensor networks and neuroscience, AI has demonstrated remarkable competencies in areas such as deep learning, cross-border integration, human-machine collaboration, collective intelligence openness, and autonomous control. These attributes are profoundly influencing national cultural security, governance system transformation, and changes in the international landscape. In this period of change, it is crucial to thoroughly understand the opportunities and challenges that AI presents to China’s cultural security and actively explore new governance models so as to contribute innovative ideas to modernize China’s system and capacity for governance.

Enhancing governance effectiveness

With the advent of the AI era and the continuous progress of industrial innovation, China’s cultural security governance is facing unprecedented development opportunities. Data-driven intelligent analysis capabilities make AI an effective tool for in-depth analysis and mining of massive datasets, offering extensive data support for cultural security governance. In particular, the latest generation of AI technology integrates various types of information, leveraging big data models and powerful computing capabilities to process and analyze data in real time, producing and disseminating results quickly. This efficient and timely information processing significantly enhances the convenience of cultural communication and the timeliness of cultural security governance.

In addition, the new generation of AI technology comprehensively uses and integrates multi-source data such as language, text, images and videos, providing robust data support for cultural security decision-making. Utilizing advanced techniques such as data mining, machine learning, and natural language processing, AI can meticulously classify, label, summarize, and reason through data related to cultural security. This extraction of valuable information from vast datasets helps decision-makers better understand the current situation and trends in cultural security, enabling them to formulate science-based and pragmatic solutions.

AI can also be used for real-time monitoring and risk identification concerning cultural security, effectively improving the foresight and accuracy of cultural security governance. By deploying advanced algorithms and machine learning models, AI systems can continuously collect information from the internet, social media, and other data sources to analyze trends and patterns in cultural communication. These real-time monitoring capabilities enable AI to detect potentially threatening content or behaviors early on. For example, AI can quickly identify online extremist propaganda, the spread of disinformation, or inappropriate remarks targeting specific cultural groups. Once such content is detected, AI can immediately alert regulatory authorities or relevant institutions, facilitating faster and more accurate response measures.

Cultural security facing risks

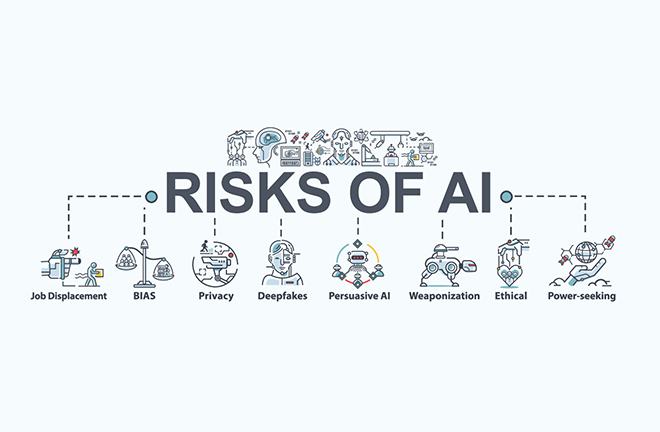

The analogy of technological advancement as a double-edged sword is particularly fitting in the AI era. Algorithmic power based on AI represents a new power structure, and platforms based on these algorithms are gradually accumulating new, unilateral influence. This algorithm-driven power is rapidly expanding due to the complexity and sophistication of algorithmic technology, coupled with insufficient authorization and supervision by public authorities, and lagging algorithmic governance methods. This phenomenon presents multiple risks and challenges to cultural security governance.

“Deepfake” technology represents a significant challenge arising from the development of AI. This technology employs AI algorithms to generate highly realistic false images, audio, and video content, thereby enhancing the stealth and deceitfulness of misinformation. By imitating the speech and behavior of celebrities or authoritative figures, deepfake technology can not only damage their reputations but also fabricate events or situations that have never happened, leading to public misunderstandings and potential issues like defamation, fraud, and social panic. In addition, it can act as a catalyst for the generation and spread of fake news, undermining public trust in the media and manipulating public opinion.

In recent years, news stories created using deepfake technology have increased, blurring the line between false content and reality and negatively impacting public opinion to varying degrees. AI-driven deepfake technology provides spreaders of misinformation with more precise means of attack by accurately identifying and targeting the psychological weaknesses of social media users in target countries.

Another serious problem is that AI may exacerbate the “information cocoon” effect and perpetuate cultural prejudice. The so-called information cocoon describes a scenario where individuals selectively access and consume information online, thus narrowing their exposure to diverse perspectives. AI-driven recommendation systems, which use data to recommend personalized content or items to users, can amplify this effect by reinforcing users’ existing views and filtering out conflicting information.
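The feedback loop described above can be illustrated with a minimal sketch. It assumes a deliberately simplified, hypothetical recommender that always suggests the topic a user has clicked most, and a user who clicks every suggestion; real recommendation systems are far more complex. The function names (`recommend`, `simulate`, `shannon_entropy`) and the sample topics are illustrative assumptions, not drawn from any real platform. Shannon entropy is used here as one possible measure of how diverse a user's information diet is.

```python
import math
from collections import Counter


def shannon_entropy(counts):
    """Diversity of a topic distribution in bits; 0 means a single topic."""
    total = sum(counts.values())
    probs = [c / total for c in counts.values() if c > 0]
    return sum(-p * math.log2(p) for p in probs)


def recommend(history, k):
    """Hypothetical recommender: return the k topics the user clicked most."""
    return [topic for topic, _ in history.most_common(k)]


def simulate(initial_clicks, rounds, k=1):
    """Assumption: each round, the user clicks every recommended topic once."""
    history = Counter(initial_clicks)
    for _ in range(rounds):
        for topic in recommend(history, k):
            history[topic] += 1
    return history


# Illustrative starting point: a user with only a mild preference for sports.
start = {"sports": 3, "politics": 2, "arts": 2, "science": 2}
before = shannon_entropy(Counter(start))
after = shannon_entropy(simulate(start, rounds=50))
print(f"diversity before: {before:.2f} bits, after: {after:.2f} bits")
```

Under these assumptions, a slight initial preference is reinforced in every round, so the measured diversity of the user's click history falls over time even though the user's underlying interests were never narrow to begin with.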

This phenomenon gives rise to several problems. First, it fosters cultural seclusion, where individuals within an information cocoon lack exposure to diverse viewpoints and are more susceptible to prejudice and misunderstandings. It also readily deepens cultural misunderstandings and stereotypes. As the information within the cocoon becomes more homogenous, people’s mindsets and attitudes may also become more closed and singular. This limits opportunities for engaging with diverse viewpoints, thereby reinforcing incorrect perceptions of and biases against certain groups or things. Moreover, the information cocoon can lead to polarized views among different groups. In such environments, individuals or groups are prone to adopting extreme views and may exhibit reduced critical thinking. This makes it challenging for disparate groups to reach consensus and mutual understanding, potentially contributing to societal divisions.

“Technology manipulation” and ideological issues are also risks that cannot be ignored. While algorithms are designed for automatic learning and decision-making, they are not inherently “technologically neutral.” The goals set by users and operators shape how they behave. If algorithms are used, intentionally or unintentionally, to promote a certain ideology, they may manipulate public opinion and trigger ideological risks at multiple levels. In addition, the large-scale flow, aggregation, and analysis of AI corpora may lead to unprecedented data security risks. These risks encompass the entire data lifecycle, including input, computation, storage, and output. They may also threaten data sovereignty and network security, raising issues with individual privacy protection and even endangering national cultural security.

Strengthening institutional construction

First, it is necessary to plan and guide AI’s development and improve laws and regulations. A forward-looking vision and strategic layout are necessary to support the use of AI technology to mitigate AI risks, and actively promote the development and application of technologies related to AI governance. Meanwhile, it is essential to adhere to agile and multi-dimensional governance principles to build an AI governance system involving multiple stakeholders. Enhancing laws, policies, and industry standards, particularly focusing on the safe application of AI in key areas, is critical.

It is also important to protect individual privacy and data security throughout both the research and development process and the application of AI to ensure it is safe, reliable, and controllable. It is further advisable to strengthen the formulation and implementation of ethical norms for AI technology and ensure moral standards and social recognition within the cultural industry in order to align AI development with social values and human dignity.

Second, it is crucial to strengthen supervision and regulation and resolutely crack down on crime. A dedicated supervisory body and management system can be established to evaluate the use of AI technology. Its work should include comprehensive, dynamic reviews and oversight of the training data, design processes, and outputs of AI algorithms to ensure their transparency and fairness. Ensuring that AI applications in the cultural field comply with legal norms is critical to preventing the misuse of technology for spreading harmful information or infringing on others’ legitimate rights and interests.

Third, it is advisable to leverage the core advantages of AI and make cultural security governance more intelligent, refined, and efficient. AI technology can be utilized to inject new vitality into the traditional cultural industry and promote the digital preservation and inheritance of traditional culture. In addition, machine learning and data mining technologies can be used to conduct in-depth analyses of traditional cultural resources such as literary works, musical pieces, and dances to enhance people’s understanding and appreciation of traditional culture. Furthermore, AI technology can be used to build a national cultural security database to systematically collect, organize, and analyze relevant data, enabling intelligent prediction and prevention of potential risks to national cultural security. In addition, AI can assist with in-depth analysis of monitoring data and feedback information concerning cultural security, making it possible to evaluate the implementation effectiveness of cultural security policies and provide important reference for the formulation and optimization of government policies.

Fourth, it is advisable to strengthen talent cultivation to provide intellectual support for AI development. Enhancing education and training in AI technology is essential. This will involve reforming the education system to incorporate AI-related courses into standard school curricula. Such courses could help students develop a foundational understanding of AI, along with practical skills, ultimately cultivating a cohort of young talent equipped with both theoretical knowledge and practical abilities in the field. Promoting vocational skill training and establishing a lifelong learning system is also recommended. Providing opportunities for professional training and further studies for in-service personnel will enable them to upgrade their knowledge and skills in line with the expanding applications of AI technology. Interdisciplinary cooperation should also be prioritized, and mutual cooperation and communication across different fields of knowledge should be encouraged so as to cultivate comprehensive talent with multidisciplinary backgrounds. For example, experts in different fields, such as computer science, mathematics, and psychology, can collaborate to explore the progress of AI technology.

Fifth, it is vital to promote global governance and jointly respond to challenges. Efforts are needed to strengthen information sharing, the exchange of experience, and the building of cooperation mechanisms, to jointly study and formulate relevant policy norms, and to form consensus and cooperation frameworks. Dialogue and cooperation among countries can be promoted through such platforms as international organizations, forums, and summits. International regulatory organizations and review processes can be launched to jointly formulate application standards for AI in the cultural industry and clarify its legal and compliant scope and boundaries to ensure that AI applications comply with not only domestic laws and regulations, but also international norms. It is advisable to strengthen technical cooperation and innovation and jointly develop advanced AI technologies through research projects, technology transfer, and talent exchange. In addition, it is important to supervise, detect, and prevent criminal acts in the cultural field, increase intellectual property protection, and avoid the misuse and abuse of AI technology.

Xia Chunhui is a research fellow at the Center for Cold War and Contemporary International Relations under the School of Politics and Law at Northeast Normal University.

Edited by REN GUANHONG
