Generative artificial intelligence: addressing regulatory and patent law challenges

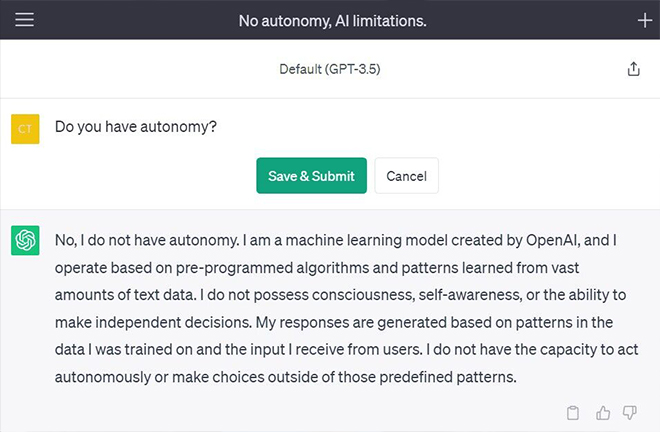

FILE PHOTO: ChatGPT’s explanation regarding its autonomy

When it comes to emerging technologies with the potential to significantly affect many aspects of life, policy and legislative decision-making often lags behind. ChatGPT running on GPT-4 was introduced only a few months after the release of ChatGPT based on GPT-3.5, yet we are still far from comprehending the implications of the earlier versions of this technology, and of large language models (LLMs) in general, for social interactions, creative writing, linguistic diversity and more.

Challenges for public policies

Some may argue that ground-breaking technologies render the existing regulatory framework obsolete or inadequate in some way. However, such a conclusion can only be reached through a “fit-for-purpose” assessment that benchmarks the existing regulatory and legal framework against the policy goals in the given context. In principle, the regulation of technology is about minimising technological risks while maximising technological benefits for society. To achieve this, policymakers first need adequate knowledge of what those risks and benefits are. In the case of emerging technologies, such an assessment is, by definition, probabilistic. No technology is absolutely safe, even if tested in extensive trials (think of novel drugs). In the end, it is about balancing technological risks and benefits.

Finding such a balance requires time and inclusive discussion. Policymakers and legislators would also need to reach a common understanding of the social benefits and risks at stake, the regulatory challenges that generative AI applications pose, the relevant policy objectives and the appropriate policy measures. Reaching agreement on such issues would be very challenging. Consider the legislative procedure in the European Union (EU) leading towards the EU AI Act. We can observe how much time it takes to find a consensus on how to implement the vision of safe and trustworthy AI, what the optimal level of safety regulation should be, how to deal with the general-purpose nature of AI as a technology, and so on. Developments in the field of generative AI continue and will not wait until society reaches a consensus on what to make of them.

On 29 March 2023, Elon Musk and a group of experts called for an immediate pause of at least six months in the training of AI systems more powerful than GPT-4. Their open letter, signed by over 1,000 people, highlighted the potential dangers of AI systems, including their impact on society and the potential for economic and political disruption. We will see what comes of this initiative. Applications such as LLMs have wide-ranging implications: their use will affect every area of life in which we use language, which is practically everything. Given the ubiquitous nature of AI applications, trying to prohibit them might be as futile as trying to catch the wind in a net. More likely, society, including policymakers and regulators, will need to figure things out “on the go.”

Whether legislators should formulate or amend laws to cope with new situations requires a comprehensive assessment specific to each field of law. Because machine-learning applications such as ChatGPT have the character of a “general-purpose technology,” there is no exhaustive catalogue of uses that might need to be regulated, which makes designing a regulatory framework highly challenging. In IP law, for instance, generative AI raises a host of questions, from access to IP-protected inputs for the purpose of developing AI systems, to the protectability of machine-learning models, to the protectability of AI output. The lawfulness of using AI-generated texts can also be governed by fields of law beyond copyright, such as rules on transparency, misinformation and defamation. Whichever area of law or regulation is concerned, I think there will be a need for specific transparency rules for AI applications. Such rules should make it possible to understand what role AI has played in a particular situation, so that the legal consequences can be defined and assessed.

Overall, I believe that the development of policies and legislation – as well as the broader societal understanding of AI applications such as ChatGPT – is likely to remain an ongoing process, given the dynamic nature of technological developments in this field.

Challenges for patent law

First, we need to define what we mean by “AI-induced” or “AI-generated” inventions. Current AI applications, impressive as they are, are tools that can be used in inventive activities. In European patent law, an invention is understood as a technical solution to a technical problem. Machine learning is based on mathematical optimisation, which might explain its broad applicability to technical and engineering problems. A well-known example is the space antenna that NASA researchers designed using a genetic algorithm. There are also numerous possibilities for applying machine learning in drug discovery and development, for instance to identify and optimise structure-activity correlations of molecules.
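The following minimal Python sketch of a generic genetic algorithm illustrates what “mathematical optimisation” means in practice. It is not the method NASA used. The bit-string encoding, the toy objective and all parameter values are illustrative assumptions. The objective counts 1-bits, a textbook exercise known as OneMax. The sketch shows that the objective, the encoding and the search settings are all chosen by a human before the algorithm runs.

```python
import random

def fitness(genome: list[int]) -> int:
    # Hypothetical, human-chosen objective: the number of 1-bits (OneMax).
    return sum(genome)

def mutate(genome: list[int], rate: float, rng: random.Random) -> list[int]:
    # Flip each bit independently with probability `rate`.
    return [1 - g if rng.random() < rate else g for g in genome]

def crossover(a: list[int], b: list[int], rng: random.Random) -> list[int]:
    # Single-point crossover: prefix of one parent, suffix of the other.
    point = rng.randrange(1, len(a))
    return a[:point] + b[point:]

def evolve(length: int = 20, pop_size: int = 30, generations: int = 50,
           mutation_rate: float = 0.02, seed: int = 0) -> list[int]:
    # Every argument here is a design decision made by a person, not the algorithm.
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(length)] for _ in range(pop_size)]
    for _ in range(generations):
        # Truncation selection: the fitter half survives unchanged as parents.
        population.sort(key=fitness, reverse=True)
        parents = population[: pop_size // 2]
        children = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            children.append(mutate(crossover(a, b, rng), mutation_rate, rng))
        population = parents + children
    return max(population, key=fitness)

best = evolve()
print(fitness(best), best)
```

Replacing the hypothetical `fitness` function changes what counts as a “good” solution. The algorithm itself never makes that choice.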

As long as AI is used as a tool, the existing rules on determining inventorship and inventive step (non-obviousness) still apply.

In most legal systems, inventorship explicitly requires or implicitly presumes the creative or intelligent conception of the invention, or a contribution to it. To fulfil this requirement, participation in the conception phase must go beyond suggesting abstract ideas, on the one hand, and simply carrying out ideas provided by others, on the other. The contribution should be intellectual and creative rather than financial, material or merely administrative.

Conceiving an invention involves abstract thinking. Although machine learning systems, and computational modelling in general, can imitate cognitive processes, their performance still depends on human thinking and decision-making in the design and application of these computational techniques. To apply computational modelling to technical and engineering problems, one needs to set up the computational process. That requires a good grasp of the problem and an in-depth understanding of the relevant working assumptions, underlying mathematical structures, potential limitations and pitfalls. Thus, I do not think we have reached the point where a human can simply “outsource” invention to generative AI. Where AI is used in the inventive process, it seems appropriate to treat the decision-making on how to apply an AI technique to the technical problem at hand as a proxy for “intelligent engagement” in conceiving the invention. Who the decision-maker or decision-makers are would depend on the circumstances of each case.

Undoubtedly, some technical inventions are easier to develop than others. Besides, inventors may use different problem-solving or research tools, such as genomic or mathematical optimisation techniques. This is where the requirement of inventive step, or non-obviousness, plays a crucial role. In principle, only inventions that surpass what an “average” professional in the relevant field would have achieved when confronted with the technical problem at hand should be deemed non-obvious and, hence, patentable. Some commentators have argued that humans play too trivial a role in the age of generative AI, or that inventive step should now be assessed against an “average” AI system instead of a person skilled in the art. However, I think AI algorithms and systems are still applied as tools, so the concept of a person skilled in the art as a proxy for the “obviousness” of an invention remains relevant. Nor do I think that AI systems render every solution obvious, since applying AI to complex problems can require complex decision-making by the designers and users of AI systems. While the substantive rules for determining the inventor and the non-obviousness of an invention still hold for AI-induced innovation, applying them in practice may become challenging and requires more detailed analysis.

The need to understand technology before revolutionising patent law

Most importantly, we need to recognise and clarify the assumptions that suggest the presence of a “standalone” agency in computational systems. Foremost among them is the tendency to describe AI systems as autonomous and to depict them anthropomorphically. In particular, AI systems are often credited with the capacity to make decisions, determine the means of achieving goals, and perform tasks and pursue goals autonomously. This can be quite confusing, especially for those with a limited technical understanding of AI.

The assumed “autonomy” of AI systems suggests that they are capable of “reasoning,” “deciding” and “behaving,” while humans may have limited or no control over such “decision-making.” These assumptions pose challenges across various fields of law and regulation, including tort liability, consumer protection and transparency regulation. For patent law, the assumed “autonomy” of AI raises distinct issues. One might assume that the act of inventing is simply handed over to AI systems, and that AI systems invent “by themselves.” If so, allocating patent rights in “AI-induced” inventions to a human user might no longer be justified. This issue has been at the heart of the “DABUS” cases litigated worldwide. Currently, the prevailing position in court decisions is that only a human being can be designated as an inventor. However, it still needs to be clarified whether AI systems are capable of inventing “autonomously.”

In everyday discourse and in the technical literature on AI, the terms “autonomy” and “automation” are often used interchangeably. However, there is a significant conceptual and technical difference between them. While no universally accepted definition of autonomy exists, it is usually associated with self-governance and self-determination: having free will and being able to exercise it over one’s own decisions and behaviour. AI systems are not autonomous in this sense. They cannot “decide” to violate human-imposed constraints, nor can they come up with information that does not pre-exist in, or cannot be derived from, data.

“Automation” means that a task can be carried out without direct human intervention during its execution. To achieve this, however, humans must first configure and set up the computational process. AI systems can carry out computations in an automated way, that is, without direct human participation while the algorithm runs. In doing so, they can automate operations such as data and information processing. Machine learning techniques use randomisation extensively, yet machine learning systems are still deterministic in one sense: given exactly the same input and the same conditions (including the same random seed), they generate the same output. The complexity of the systems and computational models, together with the use of randomisation, can make outputs vary and take AI users by surprise. Moreover, an AI system’s interaction with the real world, perceived through sensors, might cause its designer or user to lose control over it. That loss of control would be attributable to the unpredictability of the environment, not to a computer’s capacity for “self-determination.”
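The point about randomisation and determinism can be illustrated with a minimal Python sketch. The vocabulary and probabilities below are hypothetical stand-ins for a language model’s next-word distribution. They are not taken from any real system. The pseudo-random number generator is seeded, so repeating a run with the same seed reproduces the output exactly. Changing only the seed changes the “random” draws.

```python
import random

# Hypothetical next-word distribution, standing in for a language model's output layer.
VOCAB = ["patent", "invention", "tool", "inventor", "law"]
WEIGHTS = [0.30, 0.25, 0.20, 0.15, 0.10]

def generate(n_tokens: int, seed: int) -> list[str]:
    # A pseudo-random number generator is itself a deterministic algorithm:
    # the same seed always yields the same sequence of "random" draws.
    rng = random.Random(seed)
    return rng.choices(VOCAB, weights=WEIGHTS, k=n_tokens)

run_a = generate(10, seed=42)
run_b = generate(10, seed=42)  # same input, same conditions
run_c = generate(10, seed=7)   # only the seed differs

print(run_a == run_b)  # True: identical conditions reproduce the output exactly
print(run_a)
print(run_c)           # compare with run_a: variability comes from the seed, not from "choice"
```

In this sketch, output variability is fully explained by a configuration value set by a person, which mirrors the distinction between automation and autonomy drawn above.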

Commentators who have argued for revolutionising patent law tend to presume the emergence of a “standalone” agency, an “inventive genie,” in AI systems. As explained earlier, I view AI techniques instead as computational artefacts and problem-solving tools. This does not mean that no changes to the patent system are needed. However, to understand which changes should be implemented, we need a better understanding of the implications of AI technology for innovation processes, on the one hand, and for the goals and functions of patent law, on the other. Based on such an assessment, we would be better placed to decide whether granting 20 years of patent protection is the best way to incentivise innovation in an era of increasingly automated technological problem-solving.

Dr Daria Kim is a Senior Research Fellow at the Max Planck Institute for Innovation and Competition.

Edited by WENG RONG
